By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report “Integrating Hadoop into Business Intelligence (BI) and Data Warehousing (DW)” (Hadoop4BIDW) is finished and will be published in early April. I will broadcast the report’s Webinar on April 9, 2013. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #Hadoop4BIDW, #Hadoop, and #TDWI to find other leaks. Enjoy!]

This report considers Hadoop an ecosystem of products and technologies. Note that some are more conducive to applications in BI, DW, DI, and analytics than others; and certain product combinations are more desirable than others for such applications.

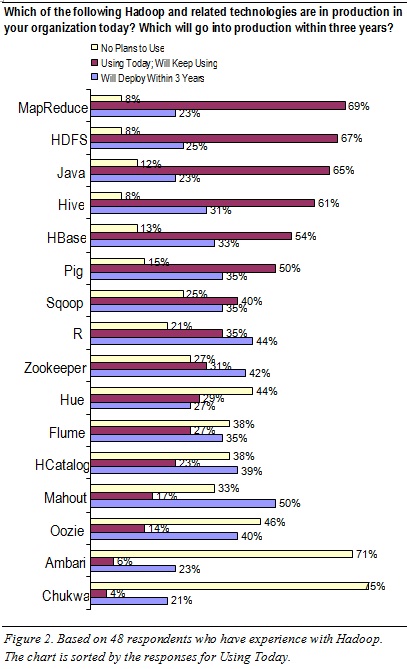

To sort out which Hadoop products are in use today (and will be in the near future), this report’s survey asked: Which of the following Hadoop and related technologies are in production in your organization today? Which will go into production within three years? Which will you never use? (See Figure 2 above.) These questions were answered by a subset of 48 survey respondents who claim they’ve deployed or used HDFS. Hence, their responses are quite credible, being based on direct hands-on experience.

HDFS and a few add-ons are the most commonly used Hadoop products today. HDFS is near the top of the list (67% in Figure 2), because most Hadoop-based applications demand HDFS as the base platform. Certain add-on Hadoop tools are regularly layered atop HDFS today:

- MapReduce (69%). For the distributed processing of hand-coded logic, whether for analytics or for fast data loading and ingestion

- Hive (60%). For projecting structure onto Hadoop data, so it can be queried using a SQL-like language called HiveQL

- HBase (54%). For simple, record-store database functions against HDFS’ data

MapReduce is used even more than HDFS. The survey results (which rank MapReduce slightly more common than HDFS) suggest that a few respondents in this survey population are using MapReduce today without HDFS, which is possible, as noted earlier. The high MapReduce usage also explains why Java and R ranked fairly high in the survey; these programming languages are not Hadoop technologies per se, but are regularly used for the hand-coded logic that MapReduce executes. Likewise, Pig ranked high in the survey, being a tool that enables developers to design logic (for MapReduce execution) without having to hand-code it.
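To make "hand-coded logic that MapReduce executes" a little more concrete, here is a minimal in-process sketch of the map, shuffle, and reduce steps, written in Python. It is an illustration only: it does not use Hadoop or any Hadoop API, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) and the sample input are hypothetical, chosen for this example.

```python
from collections import defaultdict


def map_phase(record):
    """Mapper: emit a (word, 1) pair for each word in one input line."""
    for word in record.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group mapper output by key, as a framework would between the two phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: combine all values collected for one key."""
    return key, sum(values)


def run_job(records):
    """Run map, shuffle, and reduce in a single process (no cluster involved)."""
    pairs = (pair for record in records for pair in map_phase(record))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    # Hypothetical sample input: three short lines of text.
    lines = ["hadoop hive hbase", "hadoop pig", "hive hadoop"]
    print(run_job(lines))
```

In a real deployment the mapper and reducer would run in parallel across many nodes; the sketch only shows the shape of the logic a developer hand-codes.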

Some Hadoop products are rarely used today. For example, few respondents in this survey population have touched Chukwa (4%) or Ambari (6%), and most have no plans for using them (75% and 71%, respectively). Oozie, Hue, and Flume are likewise of little interest at the moment.

Some Hadoop products are poised for aggressive adoption. For example, half of respondents (50%) say they’ll adopt Mahout within three years, with similar adoption projected for R (44%), Zookeeper (42%), HCatalog (40%), and Oozie (40%).

TDWI sees a few Hadoop products as especially up-and-coming. Usage of these will be driven up according to user demand. For example, users need analytics tailored to the Hadoop environment, as provided by Mahout (machine-learning based recommendations, classification, and clustering) and R (a programming language specifically for analytics). Furthermore, BI professionals are accustomed to DBMSs, and so they long for a Hadoop-wide metadata store and far better tools for HDFS administration and monitoring; these user needs are being addressed by HCatalog and Ambari, respectively, and therefore TDWI expects both to become more popular.

Want more? Register for my Hadoop4BIDW Webinar, coming up April 9, 2013 at noon ET: http://bit.ly/Hadoop13

Posted by Philip Russom, Ph.D. on March 15, 2013

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report “Integrating Hadoop into Business Intelligence (BI) and Data Warehousing (DW)” (Hadoop4BIDW) is finished and will be published in early April. I will broadcast the report’s Webinar on April 9, 2013. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #Hadoop, #TDWI and #Hadoop4BIDW to find other leaks. Enjoy!]

The Hadoop Distributed File System (HDFS) and other Hadoop products show great promise for enabling and extending applications in BI, DW, DI, and analytics. But are user organizations actively adopting HDFS?

To quantify this situation, this report’s survey asked: When do you expect to have HDFS in production? (See Figure 1.) The question asks about HDFS, because in most situations (excluding some uses of MapReduce) an HDFS cluster must first be in place before other Hadoop products and hand-coded solutions are deployed atop it. Survey results reveal important facts about the status of HDFS implementations. A slight majority of survey respondents are BI/DW professionals, so the survey results represent the broad IT community, but with a BI/DW bias.

- HDFS is used by a small minority of organizations today. Only 10% of survey respondents report having reached production deployment.

- A whopping 73% of respondents expect to have HDFS in production. 10% are already in production, with another 63% upcoming. Only 27% of respondents say they will never put HDFS in production.

- HDFS usage will go from scarce to ensconced in three years. If survey respondents’ plans pan out, HDFS and other Hadoop products and technologies will be quite common in the near future, thereby having a large impact on BI, DW, DI, and analytics – plus IT and data management in general, and how businesses leverage these.

Figure 1. Based on 263 respondents: When do you expect to have HDFS in production?

10% = HDFS is already in production

28% = Within 12 months

13% = Within 24 months

10% = Within 36 months

12% = In 3+ years

27% = Never
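For readers who want to check the arithmetic behind the 73% and 63% figures, the short Python sketch below simply re-adds the Figure 1 percentages. The percentages come from the figure; the dictionary layout and the `summarize` helper are assumptions made for this illustration.

```python
# Figure 1 percentages as reported in the survey (263 respondents).
figure_1 = {
    "HDFS is already in production": 10,
    "Within 12 months": 28,
    "Within 24 months": 13,
    "Within 36 months": 10,
    "In 3+ years": 12,
    "Never": 27,
}


def summarize(responses):
    """Return (already, upcoming, eventually, never) percentage totals."""
    already = responses["HDFS is already in production"]
    never = responses["Never"]
    # "Upcoming" is every answer other than "already" and "never".
    upcoming = sum(
        pct for label, pct in responses.items()
        if label not in ("HDFS is already in production", "Never")
    )
    return already, upcoming, already + upcoming, never


already, upcoming, eventually, never = summarize(figure_1)
print(f"already={already}% upcoming={upcoming}% eventually={eventually}% never={never}%")
# prints: already=10% upcoming=63% eventually=73% never=27%
```

The same numbers show that 61% of respondents (10 + 28 + 13 + 10) expect to be in production within 36 months, which is the basis for the "three years" observation above.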

Hadoop: Problem or Opportunity for BI/DW?

Hadoop is still rather new, and it’s often deployed to enable other practices that are likewise new, such as big data management and advanced analytics. Hence, rationalizing an investment in Hadoop can be problematic. To test perceptions of whether Hadoop is worth the effort and risk, this report’s survey asked: Is Hadoop a problem or an opportunity? (See Figure 3.)

- The vast majority (88%) consider Hadoop an opportunity. The perception is that Hadoop products enable new application types, such as the sessionization of Web site visitors (based on Web logs), monitoring and surveillance (based on machine and sensor data), and sentiment analysis (based on unstructured data and social media data).

- A small minority (12%) consider Hadoop a problem. Fully embracing multiple Hadoop products requires a fair amount of training in hand-coding, analytic, and big data skills that most BI/DW and analytics teams lack at the moment. But (at a mere 12%) few users surveyed consider Hadoop a problem.

Figure 3. Based on 263 respondents: Is Hadoop a problem or an opportunity?

88% = Opportunity – because it enables new application types

12% = Problem – because Hadoop and our skills for it are immature

Want more? Register for my Hadoop4BIDW Webinar, coming up April 9, 2013 at noon ET: http://bit.ly/Hadoop13

Posted by Philip Russom, Ph.D. on March 8, 2013

Blog by Philip Russom

Research Director for Data Management, TDWI

To help you better understand High-Performance Data Warehousing (HiPerDW) and why you should care about it, I’d like to share with you the series of 34 tweets I recently issued. I think you’ll find the tweets interesting, because they provide an overview of HiPerDW in a form that’s compact, yet amazingly comprehensive.

Every tweet I wrote was a short sound bite or stat bite drawn from my recent TDWI report on HiPerDW. Many of the tweets focus on a statistic cited in the report, while other tweets are definitions stated in the report.

I left in the arcane acronyms, abbreviations, and incomplete sentences typical of tweets, because I think that all of you already know them or can figure them out. Even so, I deleted a few tiny URLs, hashtags, and repetitive phrases. I issued the tweets in groups, on related topics; so I’ve added some headings to this blog to show that organization. Otherwise, these are raw tweets.

Defining High-Performance Data Warehousing (#HiPerDW)

1. The 4 dimensions of High-Performance Data Warehousing (#HiPerDW): speed, scale, complexity, concurrency.

2. High-performance data warehousing (#HiPerDW) achieves speed & scale, despite complexity & concurrency.

3. #HiPerDW 4 dimensions relate. Scaling requires speed. Complexity & concurrency inhibit speed & scale.

4. High-performance data warehousing (#HiPerDW) isn't just DW. #BizIntel, #DataIntegration & #Analytics must also perform.

5. Common example of speed via high-performance data warehousing (#HiPerDW) = #RealTime for #OperationalBI.

6. A big challenge to high-performance data warehousing (#HiPerDW) = Scaling up or out to #BigData volumes.

7. Growing complexity & diversity of sources, platforms, data types, & architectures challenge #HiPerDW.

8. Increasing concurrency of users, reports, apps, #Analytics, & multiple workloads also challenge #HiPerDW.

HiPerDW Makes Many Applications Possible

9. High-performance data warehousing (#HiPerDW) enables fast-paced, nimble, competitive biz practices.

10. Extreme speed/scale of #BigData #Analytics requires extreme high-performance warehousing (#HiPerDW).

11. #HiPerDW enables #OperationalBI, just-in-time inv, biz monitor, price optimiz, fraud detect, mobile mgt.

HiPerDW is An Opportunity

12. #TDWI SURVEY SEZ: High-performance data warehousing (#HiPerDW) is mostly opportunity (64%); sometimes problem (36%).

13. #HiPerDW is an opportunity because it enables new, broader and faster data-driven business practices.

14. #TDWI SURVEY SEZ: 66% say High Perf #DataWarehousing (#HiPerDW) is extremely important. 6% find it a non-issue.

15. #TDWI SURVEY SEZ: Most performance improvements are responses to biz demands, growth, or slow tools.

New Options for HiPerDW

16. Many architectures support High-Perf #DataWarehousing (#HiPerDW): MPP, grids, clusters, virtual, clouds.

17. #HiPerDW depends on #RealTime functions for: streaming data, buses, SOA, event processing, in-memory DBs.

18. Many hardware options support #HiPerDW: big memory, multi-core CPUs, Flash memory, solid-state drives.

19. Innovations for Hi-Perf #DataWarehousing (#HiPerDW) = appliance, columnar, #Hadoop, #MapReduce, InDB #Analytics.

20. Vendor tools are indispensable, but #HiPerDW still requires optimization, tweaks & tuning by tech users.

Benefits and Barriers for HiPerDW

21. #TDWI SURVEY SEZ: Any biz process or tech that’s #Analytics, #RealTime or data-driven benefits from #HiPerDW.

22. #TDWI SURVEY SEZ: Biggest barriers to #HiPerDW are cost, tool deficiencies, inadequate skills, & #RealTime.

Replacing DW to Achieve HiPerDW

23. #TDWI SURVEY SEZ: 1/3 of users will replace DW platform within 3 yrs to boost performance. #HiPerDW

24. #TDWI SURVEY SEZ: Top reason to replace #EDW is scalability. Second reason is speed. #HiPerDW

25. #TDWI SURVEY SEZ: The number of analytic datasets in 100-500+ terabyte ranges will triple. #HiPerDW

HiPerDW Best Practices

26. #TDWI SURVEY SEZ: 61% their top High-Performance DW method (#HiPerDW) is ad hoc tweaking & tuning.

27. #TDWI SURVEY SEZ: Bad news: Tweaking & tuning for #HiPerDW keeps developers from developing.

28. #TDWI SURVEY SEZ: Good news: Only 9% spend half or more of time tweaking & tuning for #HiPerDW.

29. #TDWI SURVEY SEZ: #HiPerDW methods: remodeling data, indexing, revising SQL, hardware upgrade.

30. BI/DW team is responsible for high-performance data warehousing (#HiPerDW), then IT & architects.

HiPerDW Options that will See Most Growth

31. #HiPerDW priorities for hardware = server memory, computing architecture, CPUs, storage.

32. #TDWI SURVEY SEZ: In-database #Analytics will see greatest 3-yr adoption among #HiPerDW functions.

33. #TDWI SURVEY SEZ: Among High-Perf #DataWarehouse functions (#HiPerDW), #RealTime ones see most adoption.

34. #TDWI SURVEY SEZ: In-memory databases will also see strong 3-yr growth among #HiPerDW functions.

FOR FURTHER STUDY:

For a more detailed discussion of High-Performance Data Warehousing (HiPerDW) – in a traditional publication! – see the TDWI Best Practices Report, titled “High-Performance Data Warehousing,” which is available in a PDF file via download.

You can also register for and replay my TDWI Webinar, where I present the findings of the TDWI report on High-Performance Data Warehousing (HiPerDW).

If you're not already, please follow me as @prussom on Twitter.

Posted by Philip Russom, Ph.D. on October 26, 2012

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

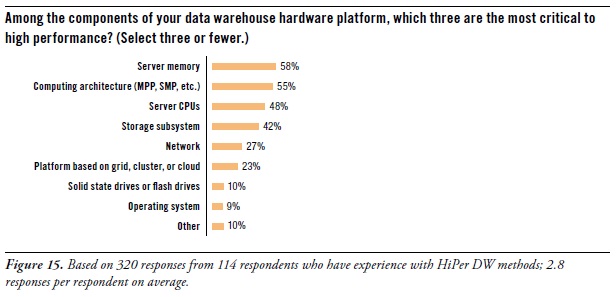

Let’s focus for a moment on the hardware components of a data warehouse platform. After all, many of the new capabilities and high performance of data warehouses come from recent advances in computer hardware of different types. To determine which hardware components contribute most to HiPer DW, the survey asked: “Among the components of your data warehouse hardware platform, which three are the most critical to high performance?” (See Figure 15. [shown above])

You may notice that the database management system (DBMS) is omitted from the list of multiple answers for this question. That’s because a DBMS is enterprise software, and this question is about hardware. However, let’s note that – in other TDWI surveys – respondents made it clear that they find the DBMS to be the most critical component of a DW platform, whether for high performance, data modeling possibilities, BI/DI tool compatibility, in-database processing logic, storage strategies, or administration.

Performance priorities for hardware are server memory, computing architecture, CPUs, and storage.

Server memory topped respondents’ lists as most critical to high performance (58% of survey respondents). Since 64-bit computing arrived ten years ago, data warehouses (like other platforms in IT) have migrated away from 32-bit platform components, mostly to capitalize on the massive addressable memory spaces of 64-bit systems. As the price of server memory continues to drop, more organizations upgrade their DW servers with additional memory; 256 gigabytes seems common, although some systems are treated to a terabyte or more. To a lesser degree, users are also upgrading ETL and EBI servers. “Big memory” speeds up complex SQL, joins, and analytic model rescores due to less I/O to land data to disk.

Computing architecture (55%) also determines the level of performance. In other TDWI surveys, respondents have voiced their frustration at using symmetrical multi-processing systems (SMP), which were originally designed for operational applications and transactional servers. The DW community definitely prefers massively parallel processing (MPP) systems, which are more conducive to the large dataset processing of data warehousing.

Server CPUs (48%) are obvious contributors to HiPer DW. Moore’s Law once again takes us to a higher level of performance, this time with multi-core CPUs at reasonable prices.

We sometimes forget about storage (42%) as a platform component. Perhaps that’s because so many organizations now have central IT departments that provide storage as an ample enterprise resource, similar to how they’ve provided networks for decades. The importance of storage grows as big data grows. Luckily, storage has kept up with most of the criteria of Moore’s Law, with greater capacity, bandwidth, reliability, and capabilities, while also dropping in price. However, disk performance languished for decades (in terms of seek speeds), until the recent invention of solid-state drives, which are slowly finding their way into storage systems.

USER STORY -- Caching OLAP cubes in server memory provides high-performance drill down. “Within our enterprise BI program, we have business users who depend on OLAP-based dashboards for making daily strategic and tactical decisions,” said the senior director of BI architecture at a media firm. “To enable drill down from management dashboards into cube details, we maintain cubes in server memory, and we refresh them daily. We’ve only been doing this a few months, as part of a pilot program. The performance is good, and we received very positive feedback from the users. So it looks like we’ll do this for other dashboards in the future. To prepare for that eventuality, we just upgraded the memory in our enterprise BI servers.”

On a related topic, one of the experts interviewed for this report had this to add: “As memory chip density increases, the price comes down. Price alone keeps most server memory down to one terabyte or less today. But multi-terabyte server memory will be common in a few years.”

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET.

Read other blogs in this series:

Reasons for Developing HiPer DW

Opportunities for HiPer DW

The Four Dimensions of HiPer DW

Defining HiPer DW

High Performance: The Secret of Success and Survival

Posted by Philip Russom, Ph.D. on October 5, 2012

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

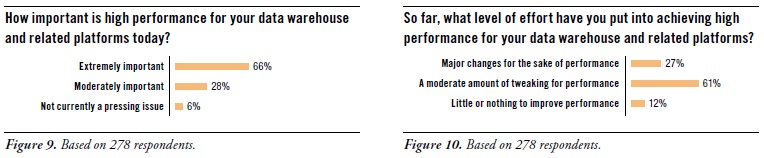

No one denies that HiPer DW is important. (See Figure 9. [shown above]) Two thirds of survey respondents called it extremely important (66%), while a quarter called it moderately important (28%). A mere 6% said that HiPer DW is not currently a pressing issue.

The wide majority of users surveyed are doing something about it. (See Figure 10. [shown above]) Luckily, most organizations can achieve their performance goals with a moderate amount of tweaking (61%). Even so, others have made major changes for the sake of performance (27%). Given that a third of user organizations are contemplating a change of platform to gain higher performance (as seen in Figure 7 [not shown in this blog]), more major changes are coming.

Whether major changes or moderate tweaking, there is a fair amount of work being done for the performance optimization of BI/DW/DI and analytic systems. To find out why, the survey asked: “Why do you need to invest time and money into performance enhancements?” (See Figure 11. [not shown in this blog])

Business needs optimal performance from systems for BI/DW/DI and analytics. This is clear from survey responses, such as: business practices demand faster and bigger BI and analytics (68%) and business strategy seeks maximum value from each system (19%). On the dark side of the issue, it’s sometimes true that [business] users’ expectations of performance are unrealistic (9%). In a similar vein, one response to “Other” said that “regulatory requirements demand timely reporting.”

Keeping pace with growth is a common reason for performance optimization. Considerable percentages of the experienced users responding to this survey question selected growth-related answers, such as scaling up to large data volumes (46%), scaling to greater analytic complexity (32%), and scaling to larger user communities with more reports (25%).

One way to keep pace with growth is to upgrade hardware. This is seen in the following responses: We keep adding more data without upgrading hardware (14%), and we keep adding users and applications without upgrading hardware (8%). Another way to put it is that adding more and heftier hardware is a tried-and-true method of optimization, though – when taken to extremes – it raises costs and dulls optimization skills.

Performance optimization occasionally compensates for tool deficiencies. Luckily, this is not too common. Very few respondents reported tool-related optimizations, such as: our BI and analytic tools are not high performance (15%), our database software is not high performance (6%), our BI and analytic tools do not take advantage of database software (4%), and our database software does not have features we need (3%). In other words, tools and platforms for BI/DW/DI and analytics perform adequately for the experienced users surveyed here. Their work in performance optimization most often targets new business requirements and growing volumes of data, reports, and users – not tool and platform deficiencies.

EXPERT COMMENT -- Query optimizers do a lot of the work for us.

A database expert interviewed for this report said: “The query optimizer built into a vendor’s database management system can be a real life saver. But there’s also a lot of room for improvement. Most optimizers work best with well-written queries of modest size with predictable syntax. And that’s okay, because most queries fit that description today. However, as a wider range of people get into query-based analytics, query optimizers need to also improve poorly written queries. These can span hundreds of lines of complex SQL, with convoluted predicate structures, due to ad hoc methods, calling out to non-SQL procedures, or by mixing SQL from multiple hand-coded and tool-generated sources.”

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET.

Read other blogs in this series:

Opportunities for HiPer DW

The Four Dimensions of HiPer DW

Defining HiPer DW

High Performance: The Secret of Success and Survival

Posted by Philip Russom, Ph.D. on September 28, 2012

By Philip Russom, TDWI Research Director

[NOTE -- My new TDWI report about High-Performance Data Warehousing (HiPer DW) is finished and will be published in October. The report’s Webinar will broadcast on October 9, 2012. In the meantime, I’ll leak a few of the report’s findings in this blog series. Search Twitter for #HiPerDW to find other leaks. Enjoy!]

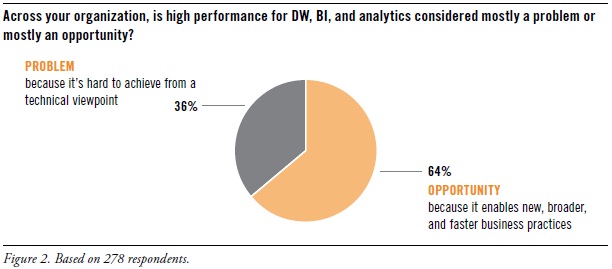

In recent years, TDWI has seen many user organizations adopt new vendor platforms and user best practices, which helped overcome some of the performance issues that dogged them for years, especially data volume scalability and real-time data movement for operational BI. With that progress in mind, a TDWI survey asked: “Across your organization, is high performance for DW, BI, and analytics considered mostly a problem or mostly an opportunity?” (See Figure 2, shown above.)

Two thirds (64%) consider high performance an opportunity. This positive assessment isn’t surprising, given the success of real-time practices like operational BI. Similarly, many user organizations have turned the corner on big data, no longer struggling to merely manage it, but instead leveraging its valuable information through exploratory or predictive analytics, to discover new facts about customers, markets, partners, costs, and operations.

Only a third (36%) consider high performance a problem. Unfortunately, some organizations still struggle to meet user expectations and service level agreements for queries, cubes, reports, and analytic workloads. Data volume alone is a show stopper for some organizations. Common performance bottlenecks center on loading large data volumes into a data warehouse, running reports that involve complex table joins, and presenting time-sensitive data to business managers.

BENEFITS OF HIGH-PERFORMANCE DATA WAREHOUSING

Analytic methods are the primary beneficiaries of high performance. Advanced analytics (mining, statistics, complex SQL; 62%) and big data for analytics (40%) top the list of practices most likely to benefit from high performance, with basic analysis (OLAP and its variants; 26%) not too far down the list. High performance is critical for analytic methods because they demand hefty system resources, they are evolving toward real-time response, and they are a rising priority for business users.

Real-time BI practices are also key beneficiaries of HiPer DW. High performance can assist practices that include a number of real-time functions, including operational business intelligence (37%), dashboards and performance management (34%), operational analytics (30%), and automated decisions for real-time processes (25%). Don’t forget: the incremental movement toward real-time operation is the most influential trend in BI today, in that it affects every layer of the BI/DW/DI and analytics technology stack, plus user practices.

System performance can contribute to business processes that rely on data or BI/DW/DI infrastructure. These include business decisions and strategies (33%), customer experience and service (21%), business performance and execution (19%), and data-driven corporate objectives (14%).

Enterprise business intelligence (EBI) needs all the performance help it can get. By definition, EBI involves thousands of users (most of them concurrent) and tens of thousands of reports (most refreshed on a 24-hour cycle). Given its size and complexity, EBI can be a performance problem. Yet, survey respondents don’t seem that concerned about EBI, with few respondents selecting EBI issues, such as standard reports (15%), supporting thousands of concurrent users (15%), and refreshing thousands of reports (12%).

Want more? Register for my HiPer DW Webinar, coming up Oct. 9 at noon ET.

Read other blogs in this series:

The Four Dimensions of HiPer DW

Defining HiPer DW

High Performance: The Secret of Success and Survival

Posted by Philip Russom, Ph.D. on September 21, 2012