Who Needs A Data Model Anyway?

Will AI eliminate the need for data models?

- By Barry Devlin

- March 26, 2018

With data lakes offering to store raw data and promising schema-on-read access, data warehouses moving in-memory for vastly enhanced query performance, and even BI tools improving ease-of-use with artificial intelligence (AI), many in the IT industry are proclaiming the imminent death of the data model.

"A model is so limiting for innovative users," they say, "and building one is so terribly time-consuming. The idea is so last century. Let it fade away like the images on some old celluloid movie."

I beg to disagree. I don't dispute that data models are changing dramatically or that new ways of building and using them must emerge. However, the term data model includes three different concepts (application-scope modeling, information structuring and simplification, and enterprise data models) and we must examine each to see how the ongoing evolution in information and process will re-create the data model landscape.

Furthermore, as discussed in a previous Upside article, data modeling occurs at three levels -- conceptual, logical, and physical. Of these, only the physical level is directly affected by technology change, although the implications of AI for the other two levels require deeper consideration than we have heretofore seen.
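To make the three levels concrete, here is a minimal Python sketch; it is an illustration, not something from the original article. The `Customer` and `SalesOrder` entities, their attributes, the helper names, and the choice of SQLite as the physical target are all assumptions. The conceptual level names business entities and how they relate, the logical level adds attributes and keys without reference to any database, and the physical level turns the logical definitions into DDL for one specific engine.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import List, Optional

# Conceptual level: business entities and relationships only, no attributes.
# (Customer and SalesOrder are hypothetical examples.)
CONCEPTUAL = {
    "entities": ["Customer", "SalesOrder"],
    "relationships": [("Customer", "places", "SalesOrder")],
}


# Logical level: attributes, keys, and references, independent of any DBMS.
@dataclass
class Attribute:
    name: str
    logical_type: str                 # "integer", "text", or "decimal"
    is_key: bool = False
    references: Optional[str] = None  # referenced entity name, if any


@dataclass
class LogicalEntity:
    name: str
    attributes: List[Attribute] = field(default_factory=list)


LOGICAL = [
    LogicalEntity("Customer", [
        Attribute("customer_id", "integer", is_key=True),
        Attribute("name", "text"),
    ]),
    LogicalEntity("SalesOrder", [
        Attribute("order_id", "integer", is_key=True),
        Attribute("customer_id", "integer", references="Customer"),
        Attribute("total", "decimal"),
    ]),
]

# Physical level: the only layer that knows about a specific engine.
SQLITE_TYPES = {"integer": "INTEGER", "text": "TEXT", "decimal": "NUMERIC"}


def to_sqlite_ddl(entity: LogicalEntity) -> str:
    """Render one logical entity as a SQLite CREATE TABLE statement."""
    columns = []
    for attr in entity.attributes:
        column = f"{attr.name} {SQLITE_TYPES[attr.logical_type]}"
        if attr.is_key:
            column += " PRIMARY KEY"
        if attr.references:
            # Simplification: the referenced key shares the attribute's name.
            column += f" REFERENCES {attr.references}({attr.name})"
        columns.append(column)
    return f"CREATE TABLE {entity.name} ({', '.join(columns)})"


def build_physical(entities: List[LogicalEntity]) -> sqlite3.Connection:
    """Create the physical tables in an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    for entity in entities:
        conn.execute(to_sqlite_ddl(entity))
    return conn
```

In this sketch, swapping SQLite for another engine would touch only `SQLITE_TYPES` and `to_sqlite_ddl`, which mirrors the point that technology change acts directly on the physical level alone.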

Keep in mind that each of these flavors of data model serves a different purpose and audience, so the usefulness and prospects of each in today's information and process technology environment must be considered separately. In addition, the sources of the data under consideration play a role in this evaluation. Internally sourced data -- process-mediated data, as I have described it in Business unIntelligence -- is typically well-defined and well-structured but often semantically complex in comparison to externally sourced data.

Application-Scope Modeling

All three concepts do indeed have their roots in the last millennium. Application-scope modeling was the first incarnation, introduced in 1976 by Chen with the original entity-relationship diagram. The aim was to allow "important semantic information about the real world" to be incorporated into the then-emerging databases in the design of operational applications. The important characteristic of such modeling is that it is local in scope, driven by the specific needs of a particular business function. It applies to operational systems as well as to limited-scope informational systems, such as data marts.

Modern improvements in database performance and data storage methods certainly reduce -- and, in some cases, eliminate -- the need for physical data modeling. However, conceptual- and logical-level modeling become even more important as enterprises incorporate ever more external data into applications and must align it with internally sourced operational and reference data in all forms of analytics.
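As a rough illustration of why the logical level still matters when the physical one fades, the following Python sketch applies a logical definition at read time to raw external records and then aligns them with internal reference data. Everything named here is hypothetical: the `LOGICAL_CUSTOMER` definition, the external feed's field names, the `segment` attribute, and both helper functions are assumptions made for the example, not part of any real system or library.

```python
import json

# Hypothetical logical definition of a customer: attribute name -> Python type.
LOGICAL_CUSTOMER = {"customer_id": int, "name": str, "country": str}

# Hypothetical mapping from one external feed's field names
# to the logical attribute names above.
EXTERNAL_FIELD_MAP = {"custNo": "customer_id", "fullName": "name", "ctry": "country"}


def read_with_schema(raw_line, field_map, logical_model):
    """Apply the logical model to one raw JSON record at read time.

    Returns (record, problems). problems lists attributes that are
    missing or cannot be converted to the logical type.
    """
    raw = json.loads(raw_line)
    # Rename external fields to logical names; unknown fields keep their name.
    renamed = {field_map.get(key, key): value for key, value in raw.items()}
    record, problems = {}, []
    for attr, logical_type in logical_model.items():
        if attr not in renamed:
            problems.append(f"missing {attr}")
            continue
        try:
            record[attr] = logical_type(renamed[attr])
        except (TypeError, ValueError):
            problems.append(f"bad {attr}: {renamed[attr]!r}")
    return record, problems


def align(external_record, internal_by_id):
    """Attach internal reference data to an external record by customer_id."""
    reference = internal_by_id.get(external_record.get("customer_id"))
    segment = reference["segment"] if reference else None
    return {**external_record, "segment": segment}
```

No physical schema is declared for the external feed in this sketch, yet the read step cannot work without an agreed logical definition of a customer and its key.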

Information Structuring and Simplification for Business Use

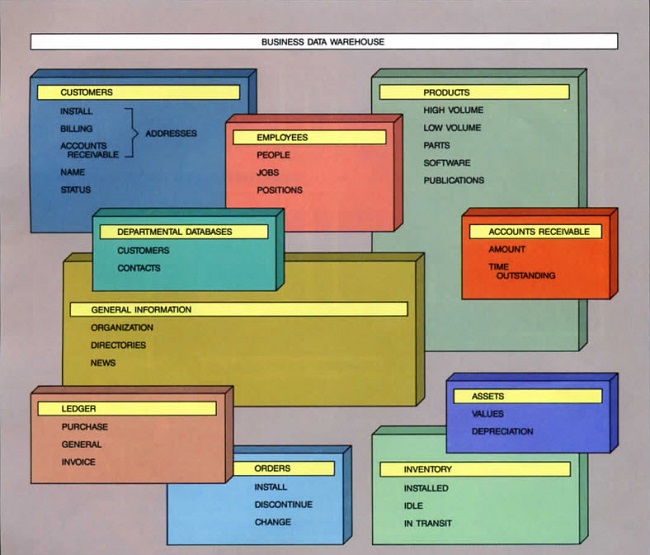

An early example from the first data warehouse architecture is shown in Figure 1. This simple picture shows major areas of interest to business people in terms they would understand. This view is broader than a single application and, although not explicitly stated, it has the potential to be used across an entire enterprise. The model does not say anything about how these areas are sourced or calculated, offering instead a simple business-level view of information structure that Bill Inmon later called subject oriented.

Figure 1: Example of contents of a business data warehouse.

This conceptual-level model, often expressed today in business catalog and glossary components, is more vital than ever. Externally sourced information, much of it poorly characterized, is exploding, and all business users need to be able to use it daily in the context of traditional business concepts such as customer and product. The model is also relatively easy and inexpensive to create and maintain, given its high-level nature. Eliminating it would cost much in business understanding and save little in ongoing maintenance.

Enterprise Data Model

Described by Zachman and Sowa in 1992, the enterprise data model (EDM) takes the enterprisewide, simple business-level view above and applies a formal modeling technique such as the entity-relationship approach to it. It is mainly used by data management professionals because of its complexity. However, the EDM is the foundation for all enterprise data integration projects, such as data warehousing and master data management, and an important element in data governance.

Digital transformation is undoubtedly the most significant enterprisewide change that business has encountered since its initial computerization in the 1950s. To even consider dropping the conceptual and logical EDM in such circumstances would be tantamount to negligence. Data and information are at the heart of digital transformation and getting them right is vital to becoming data driven or -- as I would prefer to call it -- information informed.

AI and Data Models

In all these endeavors, AI promises to automate the drudgery and augment the human skills of data archaeology -- the process of excavating the meanings and relationships within both long-buried and newly discovered data. These skills span the business-IT divide, joining the innovative world of business needs to the structured and formal landscape of semantics and data. In reality, these are the skills we have truly needed -- and sometimes find -- in data modelers.

Data modeling is far from dead. In fact, the tools and techniques are undergoing a quiet renaissance. Successful enterprises will be those that adopt them in breadth and at depth.

About the Author

Dr. Barry Devlin is among the foremost authorities on business insight and one of the founders of data warehousing in 1988. With over 40 years of IT experience, including 20 years with IBM as a Distinguished Engineer, he is a widely respected analyst, consultant, lecturer, and author of "Data Warehouse -- from Architecture to Implementation" and "Business unIntelligence -- Insight and Innovation beyond Analytics and Big Data" as well as numerous white papers. As founder and principal of 9sight Consulting, Devlin develops new architectural models and provides international, strategic thought leadership from Cornwall. His latest book, "Cloud Data Warehousing, Volume I: Architecting Data Warehouse, Lakehouse, Mesh, and Fabric," is now available.